When I left off my last discussion of tboot PCR calculations I gave a quick intro but little more. In this post I’ll go into detail on calculating the first of them: PCR[17].

There have been a number of discussions on the tboot-devel mailing list about calculating or verifying PCR values, and they were extremely useful in writing this code and post. They all fell a bit short of what I wanted to accomplish, though, in that every approach extracted hashes from the output of the txt-stat program (the tboot log) and used those hashes to reconstruct the PCR values. I wanted to construct all hashes manually, measuring and accounting for the actual things that TXT and tboot measure and store into the PCRs, and to do this independent of an actual measured launch. Basically this means isolating the things being measured, extracting them (if possible) and using them to reconstruct the PCR value on any system, like a build server or an external verifying party.

The process is pretty straightforward, though time-consuming, and the specification is phrased in such a way as to force some guess-and-check. There’s even a bit of a trick at the end that requires digging around in the tboot source code, which is always fun. I’ll also present a bit of code that automates the calculation for you, so if you’re anxious and don’t want to read any more you can go straight to the code, which can be found here: .

DISCLAIMER: The code in the pcr-calc git repo is very much a work-in-progress and should be considered unstable at best, so YMMV.

The spec that defines the DRTM-specific PCRs is the “PC Client Implementation for BIOS”. These are PCRs 17 through 20. Their individual use, however, is hardware-specific, and on Intel hardware the definitive source on what gets extended into which of these PCRs as part of establishing a DRTM is a document titled “Intel® Trusted Execution Technology (Intel® TXT) Software Development Guide: Measured Launched Environment Developer’s Guide”.

Quite a mouthful. Anyway, section 1.9.1 covers PCR[17], but the details of what gets measured are spread throughout the document. A default tboot configuration causes 3 extends to this PCR, so we’ll break this post up into 3 sections, each describing the hashes that go into one of the 3 consecutive extend operations.

First extend: SINIT ACM

The first thing that’s extended into PCR[17] is the hash of the SINIT ACM. This is a binary blob that Intel ships and that tboot uses to establish the DRTM on a platform. The binary code in the ACM is chipset-specific, so there are a number of ACMs out there to choose from. tboot can automate the selection: if you’re unsure which ACM is the right one for your platform, you can configure your bootloader to load every ACM and tboot will pick the right one. This will slow your boot process down considerably though, and selecting the proper one isn’t hard with a bit of reading, so don’t be lazy.

With the right ACM in hand you’d think it would be a simple matter of calculating the sha1 hash of the file and extending that into PCR[17]. That’s not the case though. There are two little details that must be sorted first.

Depending on the version of the ACM you’re using, the hash algorithm may be sha1 or sha256. ACMs of version 7 or later use sha256, while earlier versions use sha1. The current version of the ACM format is 8, so most modern hardware will need a sha256 hash (not to mention that most OEM implementations of TXT 3 years or older never worked in the first place … snap!).

Further, there are some fields in the ACM that aren’t included in the hash. The logic behind this escapes me, but Appendix A.1.2 specifies that some fields are omitted. Quoting the spec: “Those parts of the module header not included are: the RSA Signature, the public key, and the scratch field.” That sounds like 3 fields from the ACM, right? Wrong: there’s a 4th field omitted as well, and that’s the RSA exponent. I guess they meant for the exponent to be included in the definition of “public key”? Thanks for being explicit.

Anyway, omit the fields RSAPubKey, RSAPubExp, RSASig and the Scratch space. Got it. To omit these fields from the hash we have to parse the ACM. I’ll cover this code at the end.
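To make the “skip four fields” step concrete, here is a minimal Python sketch (not the pcr-calc code). It assumes the ACM header layout for a 2048-bit RSA key as I read tboot’s acm_hdr_t: public key at byte offset 128 (256 bytes), exponent at 384 (4 bytes), signature at 388 (256 bytes), and scratch at 644, with its length given in dwords by the ScratchSize field at offset 124. Those offsets are assumptions to check against the spec for your ACM’s header version, and the function names are hypothetical.

```python
import hashlib
import struct

# ASSUMPTION: offsets for an ACM header carrying a 2048-bit RSA key, as I read
# tboot's acm_hdr_t. Verify them against the spec for your header version.
RSA_PUBKEY_OFF, RSA_PUBKEY_LEN = 128, 256
RSA_PUBEXP_OFF, RSA_PUBEXP_LEN = 384, 4
RSA_SIG_OFF, RSA_SIG_LEN = 388, 256
SCRATCH_SIZE_OFF = 124  # ScratchSize field, counted in 4-byte dwords
SCRATCH_OFF = 644


def omitted_ranges(acm: bytes) -> list[tuple[int, int]]:
    """Return (start, end) byte ranges that are left out of the ACM hash."""
    (scratch_dwords,) = struct.unpack_from("<I", acm, SCRATCH_SIZE_OFF)
    return [
        (RSA_PUBKEY_OFF, RSA_PUBKEY_OFF + RSA_PUBKEY_LEN),
        (RSA_PUBEXP_OFF, RSA_PUBEXP_OFF + RSA_PUBEXP_LEN),
        (RSA_SIG_OFF, RSA_SIG_OFF + RSA_SIG_LEN),
        (SCRATCH_OFF, SCRATCH_OFF + 4 * scratch_dwords),
    ]


def hash_acm(acm: bytes, use_sha256: bool) -> bytes:
    """Hash the ACM, skipping the public key, exponent, signature and scratch."""
    h = hashlib.sha256() if use_sha256 else hashlib.sha1()
    pos = 0
    for start, end in sorted(omitted_ranges(acm)):
        h.update(acm[pos:start])  # bytes up to the next omitted field
        pos = end                 # jump over the omitted field
    h.update(acm[pos:])           # everything after the last omitted field
    return h.digest()
```

Usage would be along the lines of `hash_acm(open("sinit.acm", "rb").read(), use_sha256=True)`, with the sha1/sha256 choice made per the ACM version rule above.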

Finally, the 32 bits of the EDX register, which holds the flags passed to the GETSEC[SENTER] instruction, are appended to the hash of the ACM. We represent PCR[17] after the first extend operation thusly:

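$$PCR[17]_1 = \mathrm{SHA1}\big(PCR[17]_0 \mathbin{|} \mathrm{SHA1}(H(\mathrm{ACM}) \mathbin{|} \mathrm{EDX})\big)$$

where H is sha1 or sha256 depending on the ACM version, ACM stands for the module with the four fields above omitted, and PCR[17]_0 is the state of PCR[17] at time = 0. The measured launch resets PCR[17] to 20 bytes of 0’s, so PCR[17]_0 is 20 bytes of 0’s.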
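The first extend can be sketched in a few lines of Python, assuming the ACM hash has already been computed with whichever algorithm applies. Serializing EDX as a little-endian 32-bit value is my assumption for an x86 register, and the function names are hypothetical.

```python
import hashlib
import struct


def extend(pcr: bytes, measurement: bytes) -> bytes:
    """TPM 1.2 extend: new PCR value = SHA1(old PCR value | measurement)."""
    if len(pcr) != 20 or len(measurement) != 20:
        raise ValueError("TPM 1.2 PCRs and measurements are 20-byte SHA-1 digests")
    return hashlib.sha1(pcr + measurement).digest()


def acm_measurement(acm_hash: bytes, edx_senter_flags: int) -> bytes:
    """SHA1 over the ACM hash (sha1 or sha256) with the EDX flags appended.

    ASSUMPTION: EDX is serialized as a little-endian uint32.
    """
    return hashlib.sha1(acm_hash + struct.pack("<I", edx_senter_flags)).digest()


PCR17_0 = bytes(20)  # PCR[17] as reset at the start of the measured launch


def pcr17_first_extend(acm_hash: bytes, edx_senter_flags: int) -> bytes:
    """PCR[17]_1 from the formula above."""
    return extend(PCR17_0, acm_measurement(acm_hash, edx_senter_flags))
```

Note the inner hash only exists to squeeze a possibly 32-byte sha256 ACM hash plus EDX down to the 20 bytes a TPM 1.2 extend accepts.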

Second Extend: Heap Data

The second extend to PCR[17] includes various bits of data from the TXT heap. Appendix C describes the TXT heap as a contiguous region of memory set aside by the BIOS for use by ‘system software’ (aka BIOS) to pass data to the SINIT ACM and the MLE. PCR[17] is extended with the sha1 hash of either 6 or 7 concatenated pieces of data, depending on the version of the SINIT to MLE data table. The following fields are concatenated together and their sha1 hash is extended into PCR[17] for the second extend:

- BiosAcmId

- MsegValid

- StmHash

- PolicyControl

- LcpPolicyHash

- OsSinitCaps or 4 bytes of 0’s as specified by the LCP (more on this below)

If the SINIT to MLE data table version is 8 or greater an additional 4 bytes are appended representing the processor S-CRTM status. These 4 bytes are in the ProcScrtmStatus field in the SINIT to MLE data table.

The second extend to PCR[17] could be represented as follows for SINIT to MLE data table versions < 8:

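$$
\begin{aligned}
PCR[17]_2 = \mathrm{SHA1}\big(PCR[17]_1 \mathbin{|} \mathrm{SHA1}(&\texttt{SinitMleData.BiosAcm.ID} \mathbin{|} \texttt{SinitMleData.MsegValid} \mathbin{|} \\
&\texttt{SinitMleData.StmHash} \mathbin{|} \texttt{SinitMleData.PolicyControl} \mathbin{|} \\
&\texttt{SinitMleData.LcpPolicyHash} \mathbin{|} (\texttt{OsSinitData.Capabilities}, 0))\big)
\end{aligned}
$$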

For SINIT to MLE data table versions >= 8:

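$$
\begin{aligned}
PCR[17]_2 = \mathrm{SHA1}\big(PCR[17]_1 \mathbin{|} \mathrm{SHA1}(&\texttt{SinitMleData.BiosAcm.ID} \mathbin{|} \texttt{SinitMleData.MsegValid} \mathbin{|} \\
&\texttt{SinitMleData.StmHash} \mathbin{|} \texttt{SinitMleData.PolicyControl} \mathbin{|} \\
&\texttt{SinitMleData.LcpPolicyHash} \mathbin{|} (\texttt{OsSinitData.Capabilities}, 0) \mathbin{|} \\
&\texttt{SinitMleData.ProcScrtmStatus})\big)
\end{aligned}
$$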

where we use the notation (OsSinitData.Capabilities, 0) to represent a choice between appending the value of OsSinitData.Capabilities or 4 bytes of 0’s, depending on the state of the LCP policy control field.
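Here is a rough Python sketch of the second measurement, assuming the fields have already been pulled out of the heap as raw bytes in the order they are stored (no byte-order conversion). The field widths match the hex dumps in the pcr17 output later in this post; the function name is hypothetical, and the caller decides whether to include the capabilities, since exactly which PolicyControl bit governs that isn’t covered here.

```python
import hashlib

# Field widths in bytes, in concatenation order (as seen in the pcr17 output).
FIELD_SIZES = {
    "BiosAcmId": 20,
    "MsegValid": 8,
    "StmHash": 20,
    "PolicyControl": 4,
    "LcpPolicyHash": 20,
}


def heap_measurement(fields: dict[str, bytes], capabilities: bytes,
                     include_capabilities: bool, sinit_mle_version: int,
                     proc_scrtm_status: bytes = bytes(4)) -> bytes:
    """SHA1 over the concatenated heap fields for the second PCR[17] extend."""
    parts = []
    for name, size in FIELD_SIZES.items():  # dicts preserve insertion order
        value = fields[name]
        if len(value) != size:
            raise ValueError(f"{name}: expected {size} bytes, got {len(value)}")
        parts.append(value)
    if len(capabilities) != 4:
        raise ValueError("OsSinitData.Capabilities is 4 bytes")
    # (OsSinitData.Capabilities, 0): the real value or 4 bytes of 0's
    parts.append(capabilities if include_capabilities else bytes(4))
    if sinit_mle_version >= 8:
        parts.append(proc_scrtm_status)  # only present in table version 8+
    return hashlib.sha1(b"".join(parts)).digest()
```

The result is the value extended into PCR[17]_1 to give PCR[17]_2.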

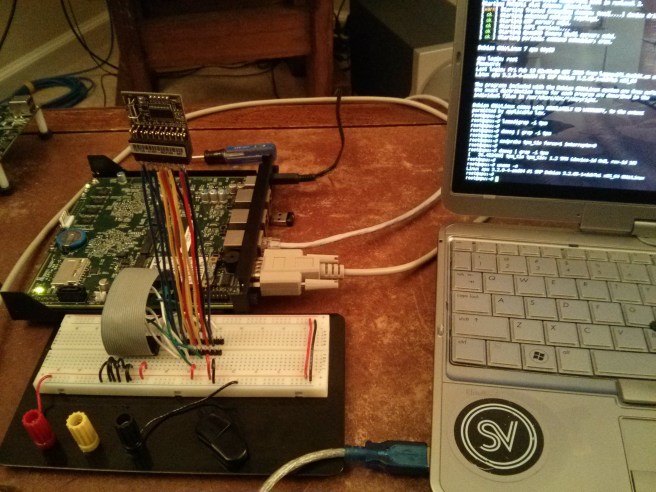

The astute reader is likely wondering: “How do I get these values out of the TXT heap … and where do I even get the TXT heap from?” Both are very good questions. Getting at the TXT heap isn’t too difficult. Presumably you’re on a Linux system with root access. The TXT heap is a region of memory like any other; you only need to know the address where it resides and its size. Both the heap base and the heap size are obtained from the TXT public registers, which are mapped at well-known memory addresses (read the spec if you’re really interested).
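A minimal Python sketch of locating the heap, assuming the values I remember from tboot’s config_regs.h: public register space at 0xFED30000, heap base register at offset 0x300 and heap size register at offset 0x308, both 64 bits wide. Treat those constants as assumptions to check against the spec; the function names are hypothetical, and kernels built with CONFIG_STRICT_DEVMEM may refuse the /dev/mem mapping.

```python
import mmap
import os
import struct

# ASSUMPTION: constants as I remember them from tboot's config_regs.h.
TXT_PUB_CONFIG_REGS_BASE = 0xFED30000
TXTCR_HEAP_BASE = 0x300
TXTCR_HEAP_SIZE = 0x308


def heap_location(regs: bytes) -> tuple[int, int]:
    """Extract (heap base, heap size) from a copy of the public register page."""
    (base,) = struct.unpack_from("<Q", regs, TXTCR_HEAP_BASE)
    (size,) = struct.unpack_from("<Q", regs, TXTCR_HEAP_SIZE)
    return base, size


def read_phys(addr: int, length: int) -> bytes:
    """Copy a page-aligned physical memory range out of /dev/mem (needs root)."""
    fd = os.open("/dev/mem", os.O_RDONLY | os.O_SYNC)
    try:
        with mmap.mmap(fd, length, mmap.MAP_SHARED, mmap.PROT_READ,
                       offset=addr) as m:
            return m[:length]
    finally:
        os.close(fd)
```

On a TXT system you would call `heap_location(read_phys(TXT_PUB_CONFIG_REGS_BASE, 0x1000))` and then `read_phys(base, size)` to grab the heap itself.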

In the git repo linked above I’ve written a simple utility to parse and output the TXT heap: txt-heapdump. You can run this utility to display, in human-readable form, the contents of the heap on the system whose PCR[17] you wish to calculate:

$ txt-heapdump --mmap --pretty

You can also use it to obtain the heap as a binary file:

$ txt-heapdump --mmap > txtheap.bin

You can then parse the binary file to display the heap in human-readable form, and it’ll look just like it did coming straight from /dev/mem:

$ txt-heapdump --pretty -i txtheap.bin

Once you have the heap as a file you can use the pcr-calc library to parse and extract various bits. Again, I’ll present the utility that does it all for you at the end. But first, the third and final extend …
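For the curious, a minimal Python sketch (not the pcr-calc API) of the first parsing step: splitting a heap image into its four data tables. It assumes, as I understand Appendix C and tboot’s heap.h, that each table is preceded by a 64-bit size that counts the size field itself and that each table begins with a 32-bit version; the function names are hypothetical.

```python
import struct

TABLE_NAMES = ("BiosData", "OsMleData", "OsSinitData", "SinitMleData")


def split_heap(heap: bytes) -> dict[str, bytes]:
    """Split a raw TXT heap image into its four data tables.

    ASSUMPTION: each table is preceded by a little-endian 64-bit size that
    includes the size field itself, so the next table starts `size` bytes on.
    The returned bytes exclude the size field.
    """
    tables = {}
    off = 0
    for name in TABLE_NAMES:
        (size,) = struct.unpack_from("<Q", heap, off)
        if size < 8 or off + size > len(heap):
            raise ValueError(f"{name}: implausible size {size:#x} at {off:#x}")
        tables[name] = heap[off + 8:off + size]
        off += size
    return tables


def table_version(table: bytes) -> int:
    """Each table starts with a 32-bit version number."""
    return struct.unpack_from("<I", table, 0)[0]
```

With `tables = split_heap(open("txtheap.bin", "rb").read())`, checking `table_version(tables["SinitMleData"]) >= 8` tells you whether ProcScrtmStatus belongs in the second extend.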

Third Extend: Launch Control Policy (LCP)

You’d expect that all of the values that are hashed as part of PCR[17] are discussed in the spec under section “PCR 17” … and you’d be wrong. A couple of sections deeper where the LCP is discussed, you’ll find a description of how the LCP policy is measured and it turns out that this measurement gets extended into PCR[17] as well! There are a number of rules laid out in this section for how the system behaves when there’s no ‘Supplier’ or ‘Owner’ LCP present. Specifically the spec states:

As a matter of integrity, the LCP_POLICY::PolicyControl field will always be extended into PCR 17. If an Owner policy exists, its PolicyControl field will be extended; otherwise the Supplier policy’s will be. If there are no policies, 32 bits of 0s will be extended.

I’ve not gone through this section with a very thorough eye so I’m not an authority here, but tboot seems to ignore these rules and instead loads a default policy when there isn’t one in the TPM NV RAM. Not saying this is good or bad, right or wrong, just pointing out that this is what tboot does and it was something that I had to figure out in order to calculate the value of PCR[17] independently on my test systems.

So my goal here is to calculate PCR values. If your system is like mine and neither you (the ‘Owner’) nor the ‘Supplier’ (your OEM) provided an LCP, how do we measure the default policy from tboot? The only thing I could come up with was to pull apart the tboot code, copy the hard-coded structures into a C program and dump them to disk in binary. The hash of this file is the one we need to extend into PCR[17] along with the LCP PolicyControl value. I’ve added a class to the pcr-calc library to parse the necessary parts of the binary LCP to support this operation.

The program that dumps tboot’s default LCP in binary form is lcp_def. I’ve kept this utility in the pcr-calc project to reproduce the LCP on demand. I considered keeping only the LCP binary around in a data file, but in case the default tboot policy changes in the future I wanted to keep the program around to dump the binary structures. When executed, this program just writes the binary policy to stdout, so you’ll have to redirect the output:
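A hedged Python sketch of the third extend’s measurement. The PolicyControl selection follows the spec text quoted above (Owner, then Supplier, then 32 bits of 0’s); with tboot’s default policy you would pass that policy’s PolicyControl value. The combination, SHA1 over the 4-byte PolicyControl followed by the sha1 of the policy blob, and the little-endian encoding are my assumptions, not something the quoted text pins down, so compare against a real launch before trusting it. Function names are hypothetical.

```python
import hashlib
import struct
from typing import Optional


def policy_control_to_extend(owner: Optional[int],
                             supplier: Optional[int]) -> bytes:
    """Owner policy wins, then Supplier; with no policy, 32 bits of 0's."""
    if owner is not None:
        value = owner
    elif supplier is not None:
        value = supplier
    else:
        value = 0
    return struct.pack("<I", value)  # ASSUMPTION: little-endian uint32


def lcp_measurement(lcp_blob: bytes, policy_control: bytes) -> bytes:
    """ASSUMPTION: SHA1(PolicyControl | SHA1(binary policy file))."""
    lcp_hash = hashlib.sha1(lcp_blob).digest()  # the "lcp hash" of lcp.bin
    return hashlib.sha1(policy_control + lcp_hash).digest()
```

If the result doesn’t line up with the “extending with” value for the LCP from a real launch, the order of the two pieces is the first thing I’d question.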

$ lcp_def > lcp.bin

Final PCR[17] Calculation

Now that we’ve figured out how to do all three extend operations and we’ve collected the heap and LCP blobs, we can calculate the final state of PCR[17]. I’ve automated this in the program pcr17 (very creative name, I know). Assuming your heap is in txtheap.bin, your LCP is in lcp.bin and your SINIT ACM file is named sinit.acm, invoke the program as follows:

$ pcr17 -i txtheap.bin -l lcp.bin sinit.acm

Your output should look something like this:

```
$ ./bin/pcr17 -i ../txt-data/txtheap.bin -l ../txt-data/lcp_def.bin ../3rd_gen_i5_i7_SINIT_51.BIN
first extend: SINIT ACM hash
extending with: 0fcc099f81549da4836d492afb8ab2e303cecfa1
PCR[17] before extend: 0000000000000000000000000000000000000000
PCR[17] after extend: 8d3dd5c8e795dfac5dbfa9859310b2bcea36d347
second extend: TXT heap data
append BiosAcmId:
8000 0000 2010 1022 0000 b001 ffff ffff
ffff ffff
append MsegValid_Bytes:
0000 0000 0000 0000
append StmHash:
0000 0000 0000 0000 0000 0000 0000 0000
0000 0000
append PolicyControl_Bytes:
0000 0000
append LcpPolicyHash:
0000 0000 0000 0000 0000 0000 0000 0000
0000 0000
append Capabilities_Bytes: False
Hashing 4 bytes of 0s in place of OsSinit.Capabilities
append ProcScrtmStatus_Bytes:
0000 0000
extending with: 7e0cdad3b8d9c344ab89657efdbfa638d1b25978
PCR[17] before extend: 8d3dd5c8e795dfac5dbfa9859310b2bcea36d347
PCR[17]: bfa4421b49f6ab899157ba6ee8fec3c5c5abf4ab
third extend: LCP
lcp hash: ab41624e7d71f068d48e1c2f43e616bf40671c39
polctrl: 1
extending with: 9704353630674bfe21b86b64a7b0f99c297cf902
PCR[17] before extend: bfa4421b49f6ab899157ba6ee8fec3c5c5abf4ab
PCR[17] after extend: 57a5f1b245ac52614498a728efe7f741b4dc3ebf
PCR[17] final: 57a5f1b245ac52614498a728efe7f741b4dc3ebf
```

Currently the program dumps the hashes and PCR states after each extend, along with the final value that should be in PCR[17] after a successful TXT measured launch. Take a look at the code if you’re interested in the details. This was as much an exercise for me in learning a bit of Python as it was about the actual end result. If given the choice again I’d have implemented this in C, just because it’s much easier to deal with binary values and memory ranges in C than in Python. Then again, this may just be because I know C better than I know Python, so YMMV.
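If you only want to double-check the chaining, a small Python sketch (a hypothetical helper, not part of pcr-calc) that replays a list of SHA-1 measurements into a PCR starting from 20 bytes of 0’s is enough:

```python
import hashlib


def replay(measurements_hex: list[str], initial: bytes = bytes(20)) -> bytes:
    """Fold SHA-1 measurements into a PCR using TPM 1.2 extend semantics."""
    pcr = initial
    for m in measurements_hex:
        digest = bytes.fromhex(m)
        if len(digest) != 20:
            raise ValueError(f"not a SHA-1 digest: {m}")
        pcr = hashlib.sha1(pcr + digest).digest()  # PCR = SHA1(PCR | measurement)
    return pcr
```

Feeding it the three “extending with” values from the output above and comparing the hex result with the “PCR[17] final” line (or with the PCR value the TPM reports after a real launch) separates mistakes in the individual measurements from mistakes in the extend arithmetic.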

Conclusion

As you’ve probably noticed, I was only partially successful in my goal. All of the data from the TXT heap that are extended into PCR[17] are themselves hashes of things we can’t access. Most of these hashes are all 0’s, though, denoting that the BIOS implementer opted out of implementing that feature (you’ll have an STM on your system one of these days, but don’t hold your breath). The only one that’s actually present is the BiosAcmId, but I’d expect the other fields to be populated in the future as well.

This is just another instance of binary blobs making their way into the TCB of our software systems. We’ve had to deal with these in various forms over the years: binary drivers, firmware and BIOS code. Intel and other chip manufacturers have been making their hardware extensible using firmware and microcode for a while now so it’s no surprise that these things have made their way into the TCB. The good news is that they’re being measured and even if we can’t get our hands on the code, or even the binary blob on account of it being embedded in some piece of hardware, we can still identify them by their hash. The implications for trust aren’t great but it’s a start.